Overview

Help security teams monitor AI agents in their environments and respond to risks with ease.

Delivered under intense time pressure, shifting requirements, and complex stakeholder alignment, this project represents the flagship design effort behind Palo Alto Networks’ most strategic AI security initiative.

Outcome

Launched as a top-tier company announcement

Featured prominently across public-facing channels

Highlighted at RSA, the world’s largest cybersecurity conference

Established the UX direction for a new category in enterprise AI security

My role

Sole Product Design Lead

Stakeholders

3 Product Managers

10 Engineers

Timeline

Feb 2025 – Nov 2025 (10 months)

Highlights

I led the 0→1 product vision and design craft to safeguard enterprise use of AI agents.

Below are key highlights from a 0→1 AI security initiative, where I led product vision and design to help enterprise security teams gain visibility and control over AI agent risk.

Context

AI agents move fast. Security needs to keep up.

In recent months, thousands of AI agents have entered enterprise environments, introducing risks that traditional device- and user-based security models were not designed to handle.

AI adoption is outpacing enterprise security models.

Initial Problem Space (Ambiguous)

How could we protect enterprise users from the risks of AI agents?

At the start of the project, it was unclear:

What risk looked like in AI agent behavior

Where responsibility should sit between humans and agents

What security teams needed (for example, prevention, monitoring, or intervention tools)

Without a shared understanding of how AI agents were used in real workflows, the problem remained broad and ill-defined.

Research & Design

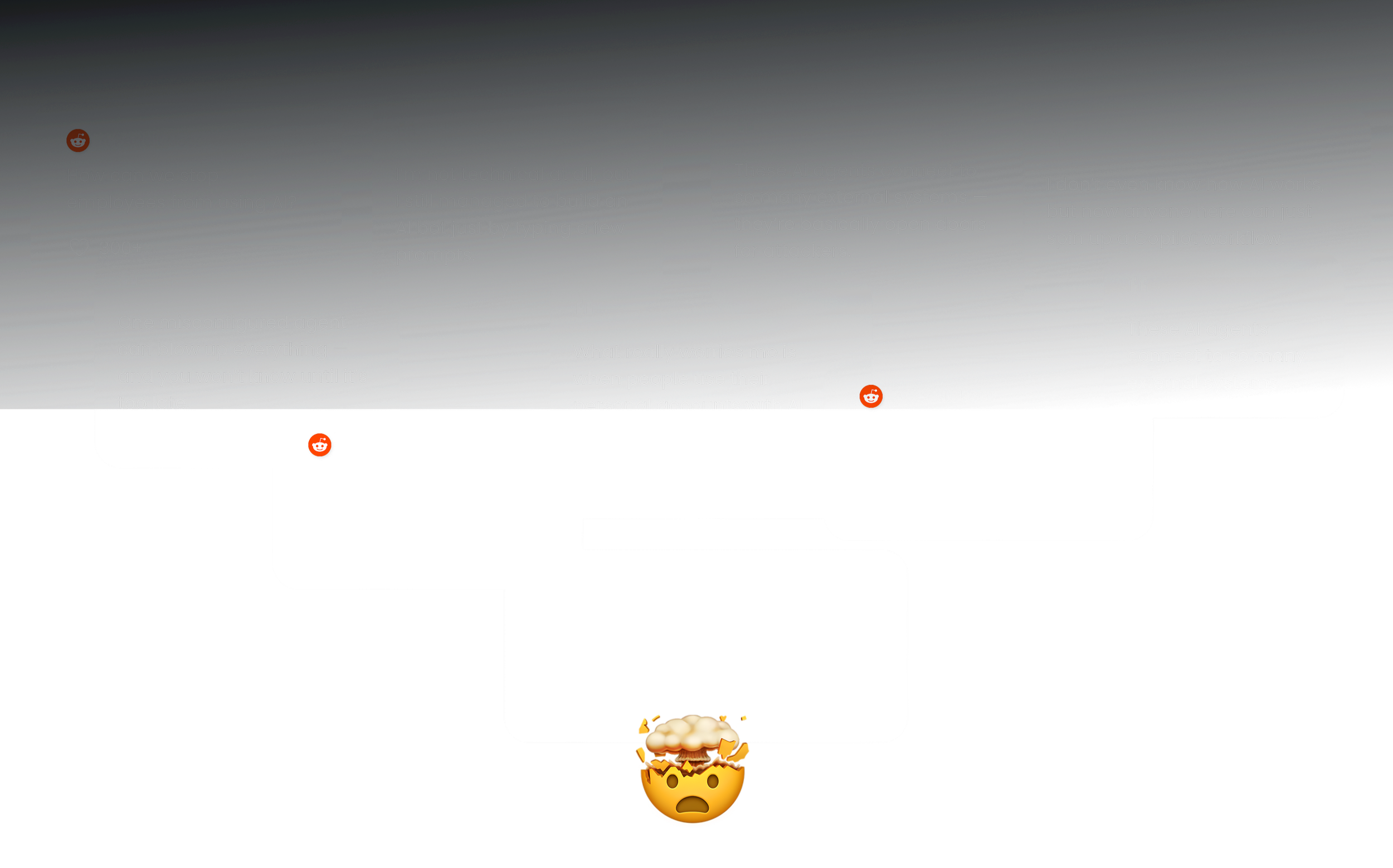

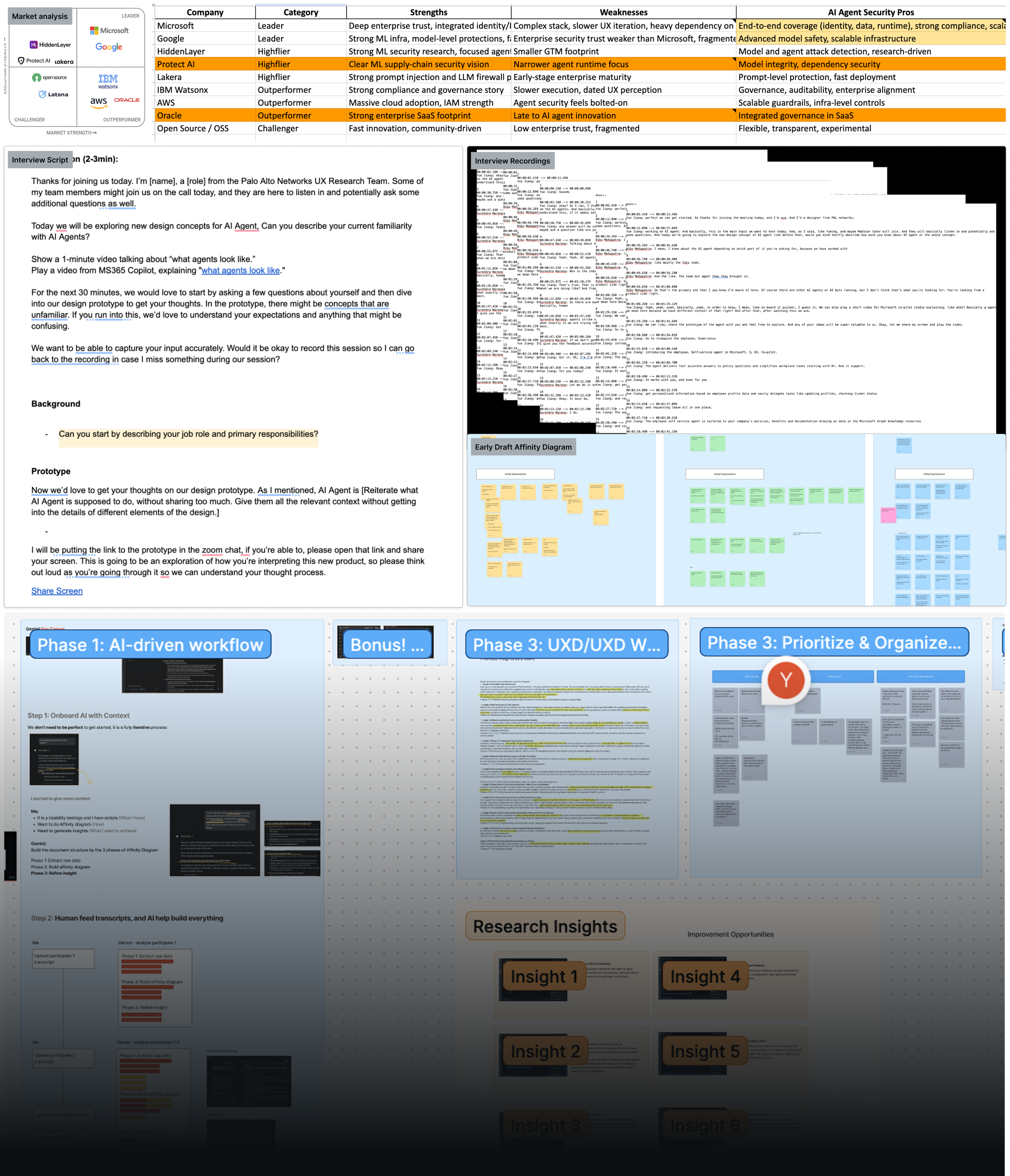

Verifying assumptions and building an in-depth understanding of users' workflows through interviews, competitive analysis, and research papers.

Facing an extremely tight timeline, I formed initial assumptions from competitive analysis and rapidly delivered the first high-fidelity prototype. I then initiated and led 8 interviews and multiple stakeholder alignment sessions to deepen our understanding of users' workflows and business goals.

Reframed Problem (Post-Research)

How might we give security teams clear visibility and actionable control over AI agents—without slowing down safe autonomy?

Through research, the problem shifted from mitigating “AI risk” to addressing a more fundamental gap: security teams lacked visibility into agent behavior and the ability to act with confidence.

The challenge became designing an experience that surfaces meaningful risk signals and enables timely intervention—without slowing down safe automation.

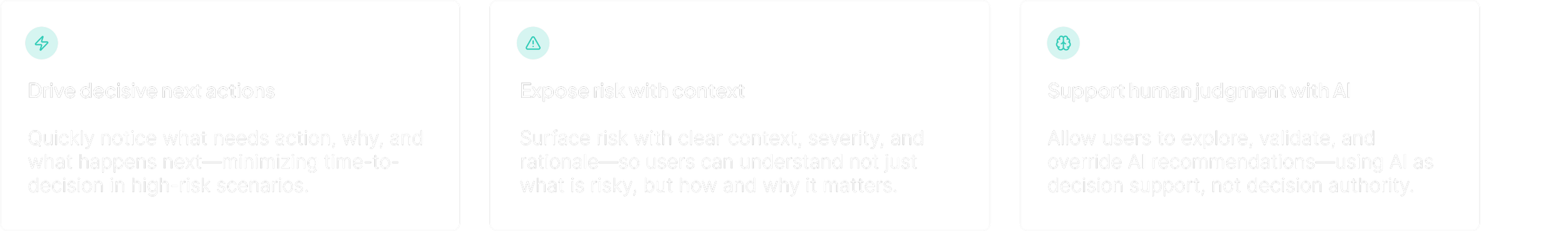

Design principles

Design principles generated from research